How to Quickly Install Hadoop on Linux

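Everyone is interested in big data, but few people think about how to actually put it into practice and get down to learning Hadoop. When we learn any technology, the first step is always to install it and then start practicing. If you search online for how to install Hadoop, most of the results cover installation on Ubuntu, and many of them do not explain things very clearly. A Fen wants to learn it too, so here is a tutorial on installing Hadoop on Linux that you can follow right away.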

Preparation

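1. First, we can rent a server from Alibaba Cloud or Huawei Cloud. An entry-level server is not that expensive, and A Fen is still using the one rented earlier. We choose CentOS 8 as the Linux release. If you are working on your own machine, download the CentOS 8 image and install it in a virtual machine. Once the system is installed, we can start installing Hadoop.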

Let's first talk about what Hadoop can do, and about the common misconceptions people have about it.

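Hadoop is mainly a framework for distributed computing and storage, so it relies chiefly on HDFS (Hadoop Distributed File System), its distributed storage system, and MapReduce, its distributed computing framework.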

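Many people misunderstand Hadoop, though. Its most enthusiastic fans will say that Hadoop can do anything, which is not true. Every technology appears in order to solve a particular kind of problem, and Hadoop is no exception. Hadoop is well suited to data analysis, but it is definitely not BI. Traditional BI belongs to the data presentation layer, whereas Hadoop is a data platform focused on semi-structured and unstructured data. The two sit at different levels.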

Some people also say that Hadoop is simply ETL, that is, data processing. However, Hadoop is not an ETL tool in the strict sense.

Hadoop Installation Tutorial

1. Install SSH

yum install openssh-server

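OpenSSH is an open-source implementation of Secure Shell. After OpenSSH Server is installed, a service named sshd should be available (under /etc/init.d on older init-script systems; on systemd-based releases such as CentOS 8 it is the sshd systemd unit). Shortly we will put the generated key in the designated location so that it can be used for authentication later.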

2. Install rsync

yum -y install rsync

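3. Generate an SSH key for the authentication steps that follow

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

An RSA key is used here rather than DSA, because OpenSSH 7.0 and later disable DSA keys by default.

4. Append the generated public key to the authorized keys file

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys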
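sshd usually refuses to use an authorized_keys file whose permissions are too open, so it can help to tighten them before testing the login. The following is a minimal sketch layered on the steps above; `secure_ssh_dir` is a hypothetical helper name, and the directory defaults to ~/.ssh.

```shell
#!/usr/bin/env bash
# Sketch: restrict permissions on the SSH directory and the authorized keys file.
# secure_ssh_dir is a hypothetical helper, not part of OpenSSH or Hadoop.
secure_ssh_dir() {
  local ssh_dir="${1:-$HOME/.ssh}"
  # Only the owner may enter or list the directory.
  chmod 700 "$ssh_dir"
  # Only the owner may read or write the list of authorized public keys.
  chmod 600 "$ssh_dir/authorized_keys"
}

# Usage (run manually after step 4):
#   secure_ssh_dir
#   ssh localhost   # should log in without asking for a password
```

If `ssh localhost` still prompts for a password afterwards, the sshd log is the usual place to look for the reason.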

Install Hadoop

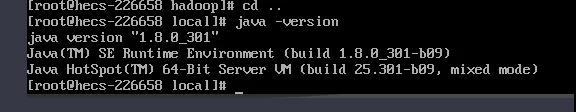

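Before installing Hadoop, install the JDK and configure its environment variables. When you see output like that in the screenshot, the JDK has been installed.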

1. Extract Hadoop

First, put the Hadoop archive on the server, as A Fen has done here.

Then extract it: tar zxvf hadoop-3.3.1.tar.gz
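The environment variables in the next step point HADOOP_HOME at /usr/local/hadoop, while the archive normally unpacks into a versioned top-level directory such as hadoop-3.3.1. One way to reconcile the two, sketched here with a hypothetical helper named `extract_hadoop`, is to unpack straight into the target directory and drop that top-level directory.

```shell
#!/usr/bin/env bash
# Sketch: unpack a Hadoop tarball directly into a chosen directory.
# extract_hadoop is a hypothetical helper name used only for illustration.
extract_hadoop() {
  local tarball="$1"
  local dest="$2"
  mkdir -p "$dest"
  # --strip-components=1 removes the leading "hadoop-x.y.z/" path element,
  # so bin/, sbin/ and etc/ end up directly under $dest.
  tar -xzf "$tarball" -C "$dest" --strip-components=1
}

# Usage (run manually, as a user allowed to write to /usr/local):
#   extract_hadoop hadoop-3.3.1.tar.gz /usr/local/hadoop
```

Renaming the extracted directory with mv would work just as well; the point is only that HADOOP_HOME and the real location agree.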

2. Edit the bashrc file

vim ~/.bashrc

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64/

export HADOOP_HOME=/usr/local/hadoop

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"

export JAVA_LIBRARY_PATH=$HADOOP_HOME/lib/native:$JAVA_LIBRARY_PATH

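Copy these lines into the file (adjusting JAVA_HOME and HADOOP_HOME to match your own installation paths), then save and exit.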

3. Apply the changes

source ~/.bashrc
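After reloading the file, it is worth confirming that the two home variables really point at working installations, since a wrong path only shows up later as a confusing startup error. The sketch below assumes the usual layout in which the JDK has bin/java and Hadoop has bin/hadoop; `check_home` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: verify that a *_HOME variable points at a directory containing
# the expected executable. check_home is a hypothetical helper.
check_home() {
  local name="$1"     # variable name, used only in messages
  local dir="$2"      # value of the variable
  local binary="$3"   # path of an executable relative to $dir
  if [ -z "$dir" ]; then
    echo "$name is not set"
    return 1
  fi
  if [ ! -x "$dir/$binary" ]; then
    echo "$name=$dir, but $dir/$binary is missing or not executable"
    return 1
  fi
  echo "$name looks fine: $dir"
}

# Usage (run manually after `source ~/.bashrc`):
#   check_home JAVA_HOME "$JAVA_HOME" bin/java
#   check_home HADOOP_HOME "$HADOOP_HOME" bin/hadoop
```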

4. Edit the configuration file etc/hadoop/core-site.xml

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

<!-- Temporary (cache) storage path -->

<property>

<name>hadoop.tmp.dir</name>

<value>/app/hadooptemp</value>

</property>

5. Edit etc/hadoop/hdfs-site.xml

<!-- The default is 3; set to 1 because this is a single machine -->

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<!-- HTTP access address; dfs.http.address is the deprecated name of dfs.namenode.http-address -->

<property>

<name>dfs.http.address</name>

<value>0.0.0.0:9870</value>

</property>
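The property fragments in steps 4 and 5 have to sit inside the `<configuration>` root element of each file. As a rough sketch of what the finished files look like, the script below writes both files in full, using only the values shown above. `write_site_file` and `write_single_node_conf` are hypothetical helper names, and the target directory is passed in as an argument.

```shell
#!/usr/bin/env bash
# Sketch: generate complete core-site.xml and hdfs-site.xml files.
# Both function names are hypothetical helpers for illustration.

# Read <property> blocks on stdin and wrap them in <configuration>.
write_site_file() {
  local target="$1"
  {
    printf '%s\n' '<?xml version="1.0" encoding="UTF-8"?>'
    printf '%s\n' '<configuration>'
    cat
    printf '%s\n' '</configuration>'
  } > "$target"
}

# Write both site files with the single-node values from steps 4 and 5.
# WARNING: this overwrites existing files in the given directory.
write_single_node_conf() {
  local conf_dir="$1"
  write_site_file "$conf_dir/core-site.xml" <<'EOF'
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/app/hadooptemp</value>
  </property>
EOF
  write_site_file "$conf_dir/hdfs-site.xml" <<'EOF'
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.http.address</name>
    <value>0.0.0.0:9870</value>
  </property>
EOF
}

# Usage (run manually; back up the existing files first):
#   write_single_node_conf "$HADOOP_HOME/etc/hadoop"
```

Editing the files by hand gives the same result; generating them is mainly useful when the setup has to be repeated.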

6. Edit etc/hadoop/hadoop-env.sh

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.212.b04-0.el7_6.x86_64

7. Edit the etc/hadoop/yarn-env.sh file

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.212.b04-0.el7_6.x86_64

8. Edit the sbin/start-dfs.sh and sbin/stop-dfs.sh files, adding the following at the top

HDFS_NAMENODE_USER=root

HDFS_DATANODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

YARN_RESOURCEMANAGER_USER=root

YARN_NODEMANAGER_USER=root

9-1. Edit the start-yarn.sh file

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

9-2. Edit the stop-yarn.sh file

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

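The settings above are needed because otherwise, when you start Hadoop as root, it reports that authentication did not pass and refuses to start.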
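Adding these lines by hand to several scripts is easy to get wrong, so here is one possible way to script it. This is only a sketch: `prepend_vars` is a hypothetical helper, the marker comment is an invented convention to keep the edit from being applied twice, and it assumes the first line of each script is its shebang.

```shell
#!/usr/bin/env bash
# Sketch: insert variable assignments right after the first line of a script.
# prepend_vars and the marker text are hypothetical, chosen for illustration.
prepend_vars() {
  local script="$1"
  shift
  local marker="# run-as users added for single-node setup"
  # Do nothing if the marker is already present (keeps the edit idempotent).
  if grep -qF "$marker" "$script"; then
    return 0
  fi
  local tmp
  tmp="$(mktemp)"
  {
    head -n 1 "$script"      # keep the shebang as the first line
    printf '%s\n' "$marker"
    printf '%s\n' "$@"       # one assignment per line
    tail -n +2 "$script"     # rest of the original script
  } > "$tmp"
  # Write back through cat so the script keeps its permissions.
  cat "$tmp" > "$script"
  rm -f "$tmp"
}

# Usage (run manually from the Hadoop directory, after backing up the scripts):
#   prepend_vars sbin/start-dfs.sh HDFS_NAMENODE_USER=root \
#     HDFS_DATANODE_USER=root HDFS_SECONDARYNAMENODE_USER=root
```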

10. Format the NameNode: go into Hadoop's bin folder and run the following command

./hdfs namenode -format

11. Go into the sbin folder and start Hadoop

./start-dfs.sh

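You can also start everything at once with ./start-all.sh

Then simply visit port 8088, which is the YARN web UI and is only reachable once YARN has been started.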

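12. Open the port in the firewall. If you are using a cloud server, also add port 9870 to the security group's inbound and outbound rules.

Add port 9870 to the firewall:

firewall-cmd --zone=public --add-port=9870/tcp --permanent

Reload the firewall:

firewall-cmd --reload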

13. Run jps. If it lists 4 or 5 processes, the configuration succeeded and you can continue.
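Counting processes by eye is a little fragile, so a small check on the names can help. The sketch below reads jps output and prints any expected daemon that is missing. `missing_daemons` is a hypothetical helper; the daemon names are the ones HDFS and YARN normally show in jps, and the list to check is up to you.

```shell
#!/usr/bin/env bash
# Sketch: report which expected daemons do not appear in jps output.
# missing_daemons is a hypothetical helper; it reads jps output on stdin
# and takes the expected daemon names as arguments.
missing_daemons() {
  local jps_output
  jps_output="$(cat)"
  local daemon
  for daemon in "$@"; do
    # jps prints "<pid> <name>"; compare the second column exactly so that
    # SecondaryNameNode is not mistaken for NameNode.
    if ! printf '%s\n' "$jps_output" |
        awk -v name="$daemon" '$2 == name { found = 1 } END { exit !found }'; then
      printf '%s\n' "$daemon"
    fi
  done
}

# Usage (run manually after starting Hadoop):
#   jps | missing_daemons NameNode DataNode SecondaryNameNode
#   jps | missing_daemons ResourceManager NodeManager   # only after start-all.sh
```

No output means every listed daemon was found.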

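Access Hadoop through the web UI at http://IP-address:9870

When you see this page, the installation has succeeded. Note that in Hadoop 3.x the port of the Hadoop web UI has not changed, but the HDFS web port changed from 50070 to 9870, so keep that in mind. Did you get all that?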

相关文章

-

在安装dubbo-admin之前确保你得linux服务器上已经成功安装了jdk,tomcat,本文重点给大家介绍linux系统下安装dubbo-admin的详细过程,需要的朋友参考下吧2021-08-24

在安装dubbo-admin之前确保你得linux服务器上已经成功安装了jdk,tomcat,本文重点给大家介绍linux系统下安装dubbo-admin的详细过程,需要的朋友参考下吧2021-08-24 -

如何双启动Win11和Linux系统?Win11 和 Linux双系统安装教程

如何双启动Win11和Linux系统?今天小编就为大家带来了Win11 和 Linux双系统安装教程,需要的朋友一起看看吧2021-08-16 -

微软 Win11/Win10 一个命令安装 Windows Linux 子系统(WSL)

微软 Win11/Win10 一个命令安装 Windows Linux 子系统(WSL),下文小编就为大家带来了详细介绍,一起看看吧2021-08-01 -

一站式智能建模软件,该软件建模流程完全自动进行,一键式即可建模,本站提供的是这款软件的linux安装版本2022-02-13

一站式智能建模软件,该软件建模流程完全自动进行,一键式即可建模,本站提供的是这款软件的linux安装版本2022-02-13 -

CentOS 6.6系统怎么安装?CentOS Linux系统安装配置图解教程

CentOS系统怎么安装?今天小编就为大家带来了CentOS Linux系统安装配置图解教程,需要的朋友可以一起看看2021-06-28 -

Kvrocks(开源美图键值数据库)V2.0.1 linux安装版

该软件是基于rocksdb并与Redis协议,该数据库可以更好的降低内存,提高复制和存储,本站提供的是这款软件的linux版本2021-06-03 -

Geth(以太坊挖矿软件) v1.10.3 官方安装版 Win/Linux/Mac

Geth是一款非常不错的原始以太坊挖矿软件,geth为您提供了一个钱包,还允许您查看区块的历史记录,创建合约以及在不同地址之间进行资金转账。原始开发人员还不断发布错误更2021-05-10 -

全称奥联加密邮件专用客户端软件,支持增强认证、邮件加密的传输、邮件加密存储、数字签名等安全功能,防止邮件终端泄密,本站提供的是这款软件的linux安装版本2021-04-21

全称奥联加密邮件专用客户端软件,支持增强认证、邮件加密的传输、邮件加密存储、数字签名等安全功能,防止邮件终端泄密,本站提供的是这款软件的linux安装版本2021-04-21 -

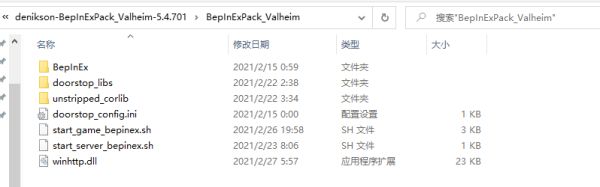

Valheim英灵神殿并没有官方的服务器,玩家们只能通过搭建服务器来获得一个稳定的房间,但是云服务器安装MOD并不想在本地那么方便,下面请看“Yardbi”分享的Valheim英灵神2021-03-05

Valheim英灵神殿并没有官方的服务器,玩家们只能通过搭建服务器来获得一个稳定的房间,但是云服务器安装MOD并不想在本地那么方便,下面请看“Yardbi”分享的Valheim英灵神2021-03-05 -

统信UOS系统安装怎么百度Linux输入法?有的对此可能还不太清楚,下文中为大家带来了UOS下百度Linux输入法安装方法。感兴趣的朋友不妨阅读下文内容,参考一下吧2021-02-25

统信UOS系统安装怎么百度Linux输入法?有的对此可能还不太清楚,下文中为大家带来了UOS下百度Linux输入法安装方法。感兴趣的朋友不妨阅读下文内容,参考一下吧2021-02-25

最新评论